In this article for eeNews Europe, we consider the intellectual property (IP) issues in relation to likely developments in AI hardware.

In recent years, the use of artificial intelligence (AI) and machine learning (ML) has expanded into an increasingly varied range of computer and mobile applications. Now, much as the spread of low-cost graphics processing units (GPUs) enabled the deep learning revolution, it is hardware design (as opposed to algorithms) that is predicted to provide the foundations for the next big developments.

With companies – from large corporates to startups and SMEs – vying to establish the fundamental AI accelerator technology that will support the AI ecosystem, the protection of intangible assets, including IP, will become one of the key factors for success in this sector.

A huge increase in the size of ML models (doubling roughly every 3.5 months) has been one of the key drivers of improved ML model accuracy in recent years. To sustain this exponential growth in complexity, which far outpaces Moore’s law, there is clear market demand for new types of AI accelerators that can support more advanced ML models for both training and inference.
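As a rough illustration of the difference in pace, the annual growth factors can be compared directly. This worked example assumes Moore’s law as a doubling roughly every 24 months, a conventional figure that is not taken from the sources cited here:

$$
\underbrace{2^{12/3.5} \approx 10.8\times}_{\text{ML model size, per year}}
\qquad \text{versus} \qquad
\underbrace{2^{12/24} \approx 1.41\times}_{\text{Moore's law, per year}}
$$

On these assumptions, model size grows by roughly an order of magnitude each year, while transistor density grows by about 40%, a gap that helps explain the demand for dedicated accelerators.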

One area of AI that would particularly benefit from new AI chips is AI inference at the edge. This relatively recent trend of running AI inference on the device itself, rather than on a remote (typically cloud) server, offers many potential benefits, such as lower processing latency and reduced data transmission and bandwidth, and it may also improve privacy and security. In light of these advantages, the growth of the edge AI chip market has been remarkable: the first commercially available enterprise edge AI chip launched only in 2017, yet Deloitte predicts that more than 750 million edge AI chips will be sold in 2020.

The global AI chip market as a whole was valued at $6.64bn in 2018 and is projected to grow substantially in the coming years, reaching $91.19bn by 2025, a compound annual growth rate (CAGR) of 45.2%. Understandably, a wide range of companies are therefore working to develop AI chips. However, the market is poised to go through a growth cycle similar to those seen in the CPU, GPU and baseband processor markets, ultimately maturing to be dominated by a few large players. IP, and patents in particular, have been key to the success of household names such as Intel, Qualcomm and ARM, and they will likely play a similarly prominent role in the AI chip arena.
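The market figures above can be cross-checked with the standard CAGR formula, CAGR = (end / start)^(1 / years) − 1. The sketch below assumes a seven-year span (2018 to 2025); the function names `cagr` and `project` are illustrative only.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate implied by growing start_value to end_value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: float) -> float:
    """Value reached after compounding start_value at `rate` for `years` years."""
    return start_value * (1.0 + rate) ** years


if __name__ == "__main__":
    # Market size in $bn: 6.64 in 2018, projected 91.19 in 2025 (assumed 7 years).
    implied = cagr(6.64, 91.19, 7)
    print(f"Implied CAGR: {implied:.1%}")
    # Compounding the 2018 value forward at the quoted 45.2% instead.
    print(f"2025 value at 45.2%: ${project(6.64, 0.452, 7):.2f}bn")
```

Over seven years the implied rate comes out at roughly 45.4%, and compounding at 45.2% gives about $90bn, so the published figures agree to within rounding; the small gap may reflect the exact base year or rounding used in the original forecast.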

The range of companies competing in the AI chip market spans from ‘chip giants’ such as Intel, Qualcomm, ARM and Nvidia, through traditionally internet-focused tech companies (e.g. Alphabet or Baidu), to numerous niche entities including Graphcore, Mythic and Wave Computing. Various large corporations that would normally seem like ‘outsiders’ in a chip market are also involved. For example, since the vast majority (90%) of edge AI chips currently go into consumer devices, many smartphone manufacturers have seized this opportunity and developed their own AI accelerators (e.g. Apple’s eight-core Neural Engine used in its iPhone range).

The race to dominate remains open. Both technical specialists and investors will be watching closely to see which companies’ technology shows the most promise, and the field will inevitably evolve through investment, acquisitions and failures. Within the next few years, we can expect market leaders to emerge. Who will become to AI chips what Intel is to CPUs (77% market share) and what Qualcomm is to baseband processors (43% market share)?

The current frontrunners appear to be Intel and Nvidia. According to Reuters, Intel’s processors currently dominate the market for AI inference, while Nvidia dominates the market for AI training chips. Neither Intel nor Nvidia is resting on its laurels, as shown by their recent acquisitions and product releases, which seem aimed at ‘dethroning’ one another. In December 2019, Intel acquired Habana Labs, an Israel-based developer of deep learning accelerators, for $2bn.

Habana’s Goya and Gaudi accelerators include a number of technical innovations, such as support for Remote Direct Memory Access (RDMA): direct access from one computer’s memory to that of another without involving either computer’s operating system. This feature is particularly useful for massively parallel computer clusters, and thus for training complex models in the cloud, where Nvidia currently dominates. Nvidia, for its part, recently released its Jetson Xavier NX edge AI chip, which delivers up to 21 TOPS (tera-operations per second) of accelerated computing and is aimed in particular at AI inference.

Several smaller entities also look promising, such as Bristol-based Graphcore and US-based Mythic. Graphcore recently partnered with Microsoft, raising $150m at a $1.95bn valuation. Its flagship product, the Intelligence Processing Unit (IPU), has impressive performance metrics and an interesting architecture; for example, the IPU holds the entire ML model inside the processor using In-Processor Memory to minimise latency and maximise memory bandwidth.

Mythic’s architecture is equally noteworthy. It combines computing-in-memory (removing the need to build a cache hierarchy), a dataflow architecture (particularly useful for graph-based applications such as inference) and analog computing (performing neural network matrix operations directly inside the memory by using the memory elements as tunable resistors). Mythic is not lagging behind Graphcore commercially either: it raised a further $30m in June 2019 from well-known investors such as SoftBank.
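To make the analog compute-in-memory idea more concrete, the sketch below models an idealised resistive crossbar: each input activation is applied as a row voltage, each weight is stored as a programmable conductance, and by Ohm’s law and Kirchhoff’s current law each column current is a dot product, I_j = Σ_i V_i · G_ij. This is a generic textbook model written for illustration, not Mythic’s actual circuit. It ignores noise, wire resistance, analog-to-digital conversion and negative weights (one common approach uses pairs of columns for positive and negative parts), and the function names `crossbar_mvm` and `quantise` are hypothetical.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def crossbar_mvm(voltages: Vector, conductances: Matrix) -> Vector:
    """Idealised analog crossbar matrix-vector multiply (illustrative model only).

    voltages[i]        -- voltage on row i (encodes input activation x_i)
    conductances[i][j] -- conductance of the cell at row i, column j (encodes weight w_ij)
    Returns the current summed on each column wire: I_j = sum_i V_i * G_ij.
    """
    if len(conductances) != len(voltages):
        raise ValueError("need one row of conductances per input voltage")
    if not conductances:
        return []
    n_cols = len(conductances[0])
    if any(len(row) != n_cols for row in conductances):
        raise ValueError("all conductance rows must have the same length")
    currents = [0.0] * n_cols
    for v, row in zip(voltages, conductances):
        for j, g in enumerate(row):
            # Ohm's law gives each cell's current V * G; Kirchhoff's current law
            # sums the currents of all cells sharing column j.
            currents[j] += v * g
    return currents


def quantise(g: float, levels: int, g_max: float) -> float:
    """Snap a target conductance to one of `levels` evenly spaced values in [0, g_max],
    mimicking a memory cell that can only be programmed to a few resistance states."""
    if levels < 2:
        raise ValueError("levels must be at least 2")
    step = g_max / (levels - 1)
    clamped = min(max(g, 0.0), g_max)
    return round(clamped / step) * step


if __name__ == "__main__":
    x = [1.0, 0.5]                  # activations applied as row voltages
    w = [[0.2, 0.4], [0.6, 0.8]]    # non-negative weights stored as conductances
    w_q = [[quantise(g, 16, 1.0) for g in row] for row in w]  # assumed 16-level cells
    print("ideal:    ", crossbar_mvm(x, w))
    print("quantised:", crossbar_mvm(x, w_q))
```

The multiply-and-accumulate happens in the physics of the array rather than in separate arithmetic units, which is why no weights need to be moved between memory and processor; the `quantise` helper hints at the precision trade-off that comes with storing weights as a limited number of resistance states.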

At this stage it is unclear who will eventually dominate the AI chips market, but a key lesson from historical developments, such as in the fields of CPUs and baseband processors, is that IP rights play a big role in who comes out on top, and who survives in the long run.

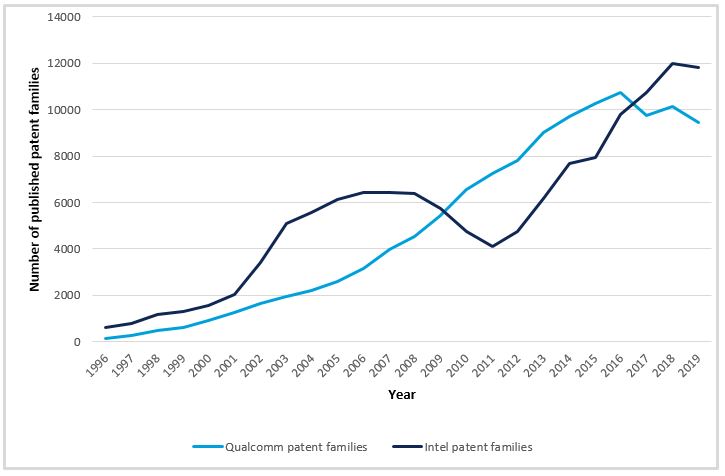

The importance of a strong patent portfolio for commercial success in chip markets is demonstrated by the volume of patent filings by companies such as Intel and Qualcomm. Their filings have been increasing since 1996 and now stand at about 10,000 published patent families per year. Because a chip design can be reverse engineered, and because the fabless model common in the industry means designs are shared with third-party foundries, it is difficult for any entity to protect its IP without a patent portfolio, complemented by other forms of protection such as trade secrets (or ‘know-how’).

A number of market leaders in the chip industry have built their business models around patent licensing, notably Qualcomm and ARM Holdings. Although Qualcomm derives most of its revenue from chip-making, the bulk of its profit comes from its patent licensing business. Qualcomm’s licensing business may have suffered over the past two years, but this was largely due to a dispute with Apple, which has now been resolved with a one-time settlement of $4.5bn from Apple to Qualcomm and a six-year licensing deal between the two companies.

ARM goes one step further, generating practically all its revenue from IP licensing without ever selling its own chips. Patent licensing has been very profitable for Qualcomm and ARM, and it will likely be equally profitable for companies that build a strong patent portfolio in the AI chip area. ARM’s business model will present an attractive option for startups that lack the resources to get involved in chip manufacturing, and the incentive to mitigate risk by remaining fabless will stay strong even as smaller companies grow.

For startups that may be open to acquisition, there is little doubt that IP is vital to achieving the strongest valuations. It is unlikely that Intel would have acquired Habana in late 2019 for $2bn without Habana’s patent portfolio stretching back to 2016, or that Graphcore would have partnered with Microsoft and reached its current valuation of $1.95bn without its more than 60 patent families (groups of patents sharing the same initial patent filing). Investor exit strategies therefore continue to dictate the need for a sound IP strategy.

Another key lesson from related sectors is the very large risk and reward associated with patent infringement. As recently as January 2020, Apple and Broadcom were ordered to pay $1.1bn in damages for infringing Caltech patents on Wi-Fi technology, which the court ruled was used in Broadcom’s wireless chips. According to Bloomberg, this was the sixth-largest patent-related verdict ever. The need for companies to build their own patent portfolios for both offensive and defensive purposes (a ‘defensive’ portfolio deters patent suits by competitors through the threat of a countersuit) therefore remains clear.

Companies are not overlooking IP issues: records show that there are already more than 2,000 patent families in the field of AI chips. The filing of new patent applications is increasing rapidly, and Intel alone has filed 160 patent applications for AI chips in the last five years. Existing market leaders and new entrants alike should therefore take note of Intel’s approach and not underestimate the importance of IP protection for their inventions, particularly during the early stages.

The legal environment surrounding IP, and patent law in particular, has changed considerably over the last two decades. In addition, the ever-increasing volume of historical patents and technical publications continues to intensify the demands on both patent offices and patent owners seeking to maintain patent quality. Nevertheless, there can be no doubt that IP will once again prove crucial in this emerging industry. Experienced technologists and IP practitioners will use lessons from the past to refine their strategies, and companies with the right approach will succeed not just on the merits of their technology but by leveraging their IP to best advantage.

This article was first published in eeNews Europe in March 2020.